A/B testing means comparing two (or more) variants of a landing page to see which one performs better. A properly designed testing process can give you invaluable data on which tweaks to your pages get the most out of your digital marketing campaigns. Exactly as it worked for one of the former US presidents…

Yeah, a few slight changes to Barack Obama’s splash page during the 2008 US presidential campaign improved the signup rate by 40.6%, which translated into an additional $57 million in donations! Before that, of course, his marketing staff ran a series of tests to select the optimal layout variant (D. Siroker & P. Koomen, A/B Testing: The Most Powerful Way to Turn Clicks into Customers, 2013).

No wonder that, as reported by InvespCRO, 60% of businesses were testing their landing pages in 2024.

In this post, I explain what landing page testing is and walk you through the key steps, useful tools, and the most interesting real case studies, along with pro-tips and common mistakes to avoid. Let’s begin!

Key Takeaways:

- A/B testing identifies the most effective landing page layout by comparing two or more alternatives.

- Testing different landing page elements can lead to improved user experience and higher conversions.

- Data-driven insights from A/B testing enhance marketing effectiveness.

- Landingi offers advanced tools for efficient and effective A/B testing.

- Incrementality experiments differ from A/B tests by focusing on the overall impact of marketing efforts.

What is Landing Page AB Testing?

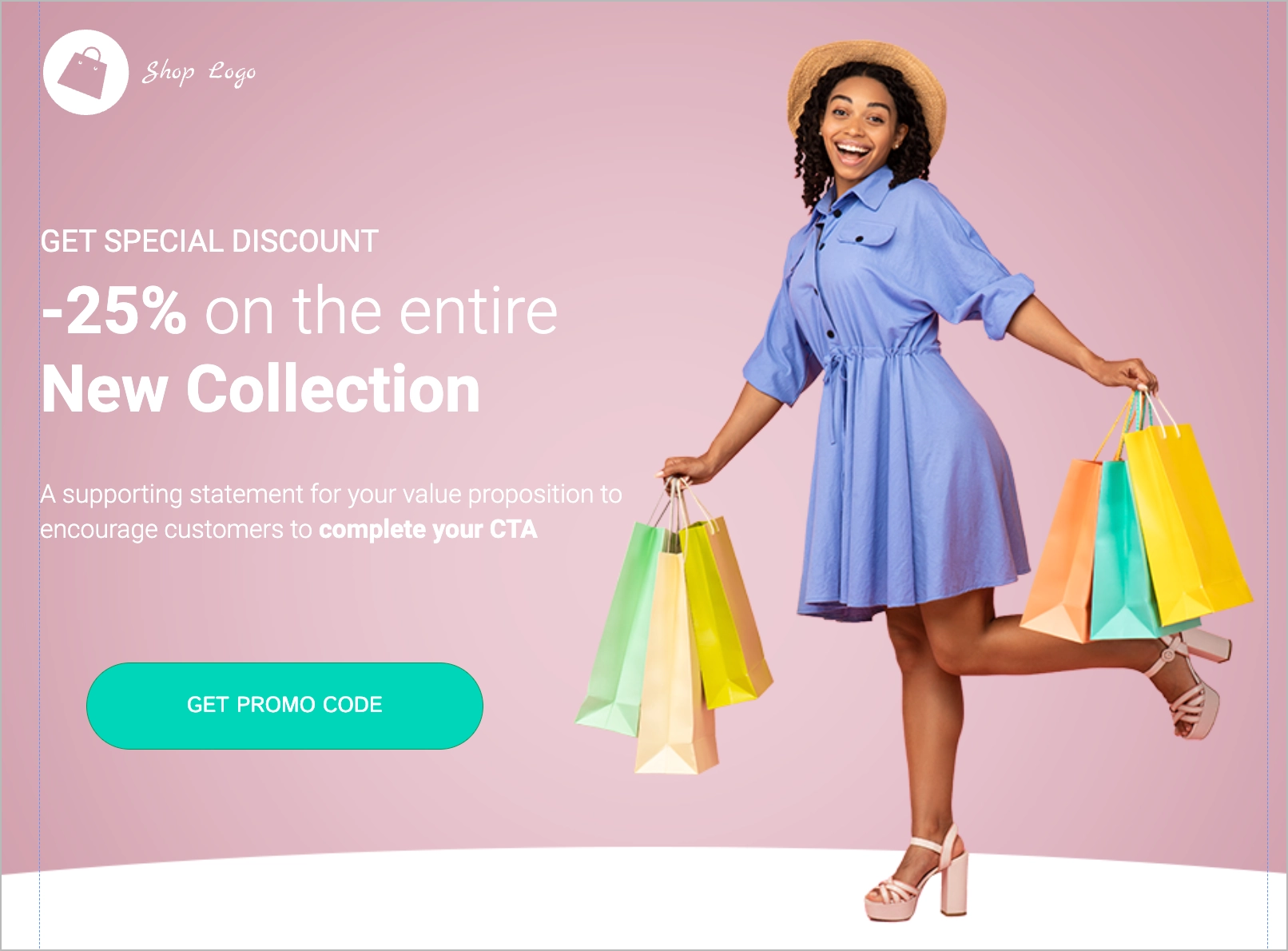

Landing page A/B testing is a method used to compare two versions of a landing page to determine which one performs better. This process involves creating two variants of a landing page, often referred to as version A and version B, and distributing website traffic between them to see which one drives more conversions. To visualize it, take a look at the landing page below:

Let’s assume it’s variant “A”. Remember it. Now, take a look at variant “B” of this page:

See the difference? The buttons on the two pages differ in copy, background color, border, and a few other subtle design parameters. An A/B test of these pages can show which one (if either) converts better. Since the button is the only difference, we can be fairly confident that any significant difference in conversion rate is due to the design of this element. This is, in a nutshell, how A/B testing works.

Boost your landing page conversions—start A/B testing with Landingi today!

What is Landing Page Split Testing?

Split testing (or split URL testing) is often used interchangeably with A/B testing, but sometimes it refers to a specific function in A/B testing tools that allows you to change the proportion of traffic directed to each tested variant. For example, you may wish to send 70% of traffic to Variant A and 30% to Variant B. In simple A/B testing, traffic distribution is automated and depends entirely on the algorithms of your testing tool or platform.
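Whatever the proportions, each visitor is usually assigned to a variant at random with probabilities equal to the chosen shares, and the assignment is kept stable so a returning visitor sees the same page. The Python sketch below shows one common way to do this, hashing a visitor ID into a bucket. The function name `assign_variant`, the `salt` parameter, the visitor IDs and the 70/30 weights are illustrative assumptions, not how Landingi or any particular tool implements it.

```python
import hashlib


def assign_variant(visitor_id: str, weights: dict[str, float], salt: str = "test-1") -> str:
    """Hypothetical helper: map a visitor to a variant according to traffic weights.

    The same visitor_id (with the same salt) always gets the same variant,
    so returning visitors see a consistent page.
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    # Hash the visitor ID into a pseudo-random number in [0, 1).
    digest = hashlib.sha256(f"{salt}:{visitor_id}".encode()).hexdigest()
    point = int(digest[:15], 16) / 16**15
    # Walk through the cumulative weights until the point falls into a bucket.
    cumulative = 0.0
    for variant, weight in weights.items():
        cumulative += weight / total
        if point < cumulative:
            return variant
    return list(weights)[-1]  # guard against floating-point rounding


if __name__ == "__main__":
    split = {"A": 0.7, "B": 0.3}
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"visitor-{i}", split)] += 1
    print(counts)  # counts close to a 70/30 split
```

Using a different salt for each test reshuffles visitors between experiments, while changing only the weights (e.g., from 10/90 to 30/70 during a gradual rollout) moves just the visitors near the bucket boundary.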

You may ask when setting traffic proportions manually is useful. Here are the most common reasons:

- Risk Mitigation: If one variant (e.g., Variant A) is the current best-performing or default version, directing a larger proportion of traffic to it (e.g., 70%) ensures that any potential negative impact on overall performance is minimized. This is particularly important when the stakes are high, such as during peak sales periods or major marketing campaigns.

- Faster Data Collection for New Variants: When testing a new variant (e.g., Variant B) that might significantly improve performance, sending it a larger share of traffic (e.g., 70% instead of 30%) gathers enough data about it faster. This accelerated data collection helps in making quicker decisions about whether to fully implement the new variant or not.

- Resource Allocation: Custom traffic proportions allow for better resource management. For instance, if a variant requires more server resources or customer support, limiting its traffic can prevent overloading systems and ensure a smoother user experience.

- Controlled Experimentation: By adjusting traffic proportions, businesses can control the scope of their experiments. This is useful when testing high-risk or innovative changes, where a gradual rollout and careful monitoring are necessary to avoid significant disruptions.

- Gradual Rollout of Changes: Setting custom traffic proportions enables a phased approach to implementing changes. For example, starting with 10% of traffic directed to a new variant and gradually increasing it as confidence in its performance grows. This approach ensures that any issues can be identified and addressed before a full rollout.

- Strategic Targeting: Custom proportions allow for strategic targeting, where certain user segments might be directed to specific variants based on demographics, behavior, or other criteria. This targeted approach can yield more relevant insights and improve the overall effectiveness of the test.

In summary, setting custom traffic proportions in split testing provides flexibility, reduces risk, and ensures more strategic and controlled experimentation, ultimately leading to better decision-making and optimized performance.

Learn how to optimize your landing page with A/B testing—get started with Landingi!

Which Landing Page Elements Can Be AB Tested?

In A/B testing, page variants can differ in elements like headlines, visual elements (images, videos, carousels, effects, etc.), forms, call-to-action buttons, social proof and even overall layouts. By measuring user interactions and conversion rates, marketers can identify which version is more effective at achieving their goals, whether that’s generating leads, making sales, or encouraging sign-ups.

Ready to improve your landing page? Run A/B tests with Landingi now!

What are Key Benefits of Landing Page AB Testing?

The key advantage of A/B testing is its ability to provide data-driven insights. Instead of relying on guesswork, marketers can make informed decisions based on real user behavior. This leads to continuous improvement and optimization of landing pages, ultimately enhancing user experience and increasing conversion rates.

7 Steps for Landing Page AB Testing to Improve Conversion Rates

Landing page testing consists of several steps that help you systematically optimize your pages and improve conversion rates. By following them, you can identify what resonates best with your audience and make data-driven improvements.

1. Defining Goals and Metrics

Firstly, define clear goals and metrics. It will help you understand what you aim to achieve and how you will measure success. Goals could be increasing sign-ups, improving click-through rates, or boosting sales. Metrics are the specific data points you’ll track to evaluate performance.

Example: Your goal might be to improve the conversion rate on mobile devices, with the number of mobile conversions per week as the metric.

Pro-Tip: Choose one primary metric to focus on to avoid diluting the impact of your test.

Mistake to Avoid: Avoid setting too many goals or metrics, which can complicate analysis and dilute focus.

Maximize your landing page performance—A/B test with Landingi today!

2. Creating Hypotheses

Secondly, create a few hypotheses about what changes might improve your conversion rates. A well-defined hypothesis guides your test design and ensures you have a clear idea of what you’re testing and why. Hypotheses should be based on data, user feedback, or insights from previous tests.

Example: A well-formed hypothesis might read: “Changing the headline from ‘Sign Up Now’ to ‘Get Exclusive Updates’ will increase newsletter sign-ups by 15% over a two-week period.”
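To check a result against a hypothesis like this one, you compare the relative lift of the variant over the control with the target you stated. A minimal Python sketch follows; the function names and the sign-up and visitor counts in the usage block are made-up illustrations, and a lift that meets the target still needs the significance check described in step 6.

```python
def relative_lift(control_conversions: int, control_visitors: int,
                  variant_conversions: int, variant_visitors: int) -> float:
    """Relative change in conversion rate of the variant vs. the control (0.15 = +15%)."""
    control_rate = control_conversions / control_visitors
    variant_rate = variant_conversions / variant_visitors
    if control_rate == 0:
        raise ValueError("control conversion rate is zero; relative lift is undefined")
    return (variant_rate - control_rate) / control_rate


def meets_hypothesis(lift: float, target: float = 0.15) -> bool:
    """True if the observed lift reaches the target stated in the hypothesis."""
    return lift >= target


if __name__ == "__main__":
    # Hypothetical two-week results for the newsletter headline test.
    lift = relative_lift(200, 5000, 240, 5000)
    print(f"lift = {lift:.1%}, meets 15% target: {meets_hypothesis(lift)}")
```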

Pro-Tip: Make your hypotheses specific and measurable to easily evaluate results.

Mistake to Avoid: Avoid vague hypotheses that are not based on data or cannot be measured, as they make test results hard to evaluate.

3. Designing Variations

Thirdly, design at least two versions of your landing page (a control version and at least one variation) based on your hypotheses. These versions will be compared to see which performs better. Make sure the changes are clear and noticeable, yet not so drastic that they confuse visitors.

Example: As the control version, you can use the existing version of your landing page, while the new version should differ in the element you aim to test. If you’d like to check the efficiency of your lead generation form, modify the form in the new version (e.g., reduce the number of fields, or change its layout, copy, or overall length).

Pro-Tip: Limit changes to one element per test to isolate its impact on performance.

Mistake to Avoid: Avoid making too many changes at once, which can make it difficult to identify which change impacted results.

4. Splitting Traffic

Fourthly, split website traffic between the versions of your landing page. This ensures a fair comparison by exposing each variant to an appropriate number of visitors. In most cases you should use equal shares, but in some specific cases it is advisable to split the traffic in other proportions (see the section about split testing).

Example: If you already know your current landing page’s performance well (including which elements most influence its conversion rate), it may be reasonable to direct more traffic to the new variant, which gives you results sooner and lets you implement valuable changes more quickly.

Pro-Tip: Assign visitors to variants randomly, and keep the split even unless you have deliberately chosen custom proportions.

Mistake to Avoid: Avoid unintentionally uneven or non-random traffic distribution, which can skew the results and lead to incorrect conclusions.
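One practical way to catch unintended uneven distribution is a sample ratio mismatch (SRM) check: compare the visitor counts each variant actually received with the split you configured, using a chi-square goodness-of-fit test. The Python sketch below assumes two variants (one degree of freedom, so the p-value can be computed with `math.erfc` and no extra libraries); the function name, the visitor counts and the 0.001 alert threshold are illustrative assumptions.

```python
import math


def srm_p_value(observed_a: int, observed_b: int, expected_share_a: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value for a two-variant traffic split.

    A very small p-value suggests the observed split differs from the
    configured one by more than chance would explain.
    """
    total = observed_a + observed_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((observed_a - expected_a) ** 2 / expected_a
            + (observed_b - expected_b) ** 2 / expected_b)
    # For 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


if __name__ == "__main__":
    p = srm_p_value(5_210, 4_790)  # configured as a 50/50 split
    if p < 0.001:
        print(f"Possible sample ratio mismatch (p = {p:.2g}); check your setup.")
    else:
        print(f"Split looks consistent with 50/50 (p = {p:.2g}).")
```

If the check fails, the cause is usually technical (redirect errors, bot traffic, caching), and the test results should not be trusted until it is fixed.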

See how A/B testing improves conversions—start testing with Landingi!

5. Running the Test

Fifthly, run the test for a sufficient period to collect enough data. The duration should be long enough to account for variations in visitor behavior, such as weekdays versus weekends.

Example: Let the test run for at least one week to gather sufficient data. If you have low traffic, your test should run longer.

Pro-Tip: Avoid making changes during the test period to ensure consistency.

Mistake to Avoid: Do not make any changes to your website or test variables during the testing period. Alterations can skew results and invalidate the test.

6. Analyzing Results

Sixthly, analyze the results by reviewing the collected data to determine which variation performed better. Use statistical tools to compare conversion rates and other relevant metrics, and base your conclusions only on statistically significant results.

Example: Compare the conversion rates of both versions to see which performed better. Try to identify why the winning landing page performed better. Compare your conclusions with the results of similar case studies available online to increase the likelihood that your interpretation is accurate.
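A common statistical tool for comparing the conversion rates of two variants is the two-proportion z-test. The following Python sketch (standard library only) returns the z statistic and the two-sided p-value; the function name and the visitor and conversion counts are made up, and dedicated testing tools may use other methods, such as Bayesian or sequential statistics.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    z, p = two_proportion_z_test(conv_a=400, n_a=10_000, conv_b=480, n_b=10_000)
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"z = {z:.2f}, p = {p:.4f} -> {verdict} at the 5% level")
```

Decide on the significance level and sample size before the test starts; checking the p-value every day and stopping as soon as it dips below 0.05 inflates the chance of a false winner.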

Pro-Tip 1: Break down your data by different audience segments, such as demographics, traffic sources, or user behavior. This can reveal insights that might be hidden in the aggregate data and help tailor future optimizations.
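Breaking results down by segment amounts to grouping visits by segment and variant before computing conversion rates. A minimal sketch in plain Python, assuming each visit is recorded as a hypothetical `(variant, segment, converted)` tuple; the record format and sample data are illustrative only.

```python
from collections import defaultdict


def conversion_by_segment(visits):
    """Return {(segment, variant): conversion_rate} from (variant, segment, converted) records."""
    totals = defaultdict(lambda: [0, 0])  # (segment, variant) -> [conversions, visits]
    for variant, segment, converted in visits:
        bucket = totals[(segment, variant)]
        bucket[0] += int(converted)
        bucket[1] += 1
    return {key: conv / n for key, (conv, n) in totals.items()}


if __name__ == "__main__":
    sample = [
        ("A", "mobile", True), ("A", "mobile", False), ("A", "desktop", False),
        ("B", "mobile", True), ("B", "mobile", True), ("B", "desktop", False),
    ]
    for (segment, variant), rate in sorted(conversion_by_segment(sample).items()):
        print(f"{segment:8} {variant}: {rate:.0%}")
```

Each segment holds fewer visitors than the whole test, so a difference that shows up in one segment needs its own significance check before you act on it.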

Pro-Tip 2: Examine not only conversion rates, but also other relevant metrics like bounce rate, time on site, and customer lifetime value. Such a holistic view provides a more comprehensive understanding of user behavior and the impact of each variation.

Mistake to Avoid: Be cautious of confirmation bias – interpreting data in a way that confirms your preconceptions. Analyze the data objectively and consider all possible explanations for the observed results.

7. Implementing the Winning Variation

Seventhly, implement the winning variation on your live landing page. Document the results and learnings from this test to inform future A/B tests.

Example: If the new headline increased sign-ups, update your landing page accordingly. In other words, make the winning variant your live webpage.

Pro-Tip: Continuously test new hypotheses to keep optimizing your landing page.

Each step of the test builds on the previous one, creating a structured approach to achieving better results and higher engagement from your audience.

By performing regular landing page tests, you can systematically improve conversion rates, making data-driven decisions that enhance your marketing efforts.

Optimize every element of your landing page—run A/B tests with Landingi!

Landing Page AB Testing Examples

Landing page A/B testing is a common practice for companies across different industries. Below you will find several examples taken from real case studies published online. Explore them to learn what testing looks like in digital marketing practice and what kind of effects you can expect.

1. Bukvybag

Tested Element: Landing page headline

Background (Challenge): Bukvybag, a company specializing in women’s bags, was struggling with a low conversion rate on their home page. They suspected that their existing headline, “Versatile bags & accessories,” was not effectively capturing the interest of their target audience.

A/B Testing Scope and Variants: To tackle this issue, Bukvybag utilized a dynamic content feature to run a split test on their home page headline. The goal was to identify which headline would resonate best with potential customers and drive higher conversion rates. They tested multiple variations of the headline, each emphasizing a different value proposition:

- Variant A: “Stand out from the crowd with our fashion-forward and unique bags”

- Variant B: “Discover the ultimate travel companion that combines style and functionality”

- Variant C: “Premium quality bags designed for exploration and adventure”

Outcomes: The A/B test revealed a clear winner, which led to a 45% increase in orders. This significant uplift showed that the winning headline engaged the audience more effectively. The results reached statistical significance, indicating that the improvement was unlikely to be due to random chance and suggesting that the new headline could sustain the higher conversion rate over time.

Based on a story by: Barbara Bartucz (OptiMonk)

Turn data into action—A/B test your landing page with Landingi now!

2. Going

Tested Element: Copy for call-to-action button

Background (Challenge): Going faced a significant challenge in effectively presenting their subscription plans. Their conventional strategy focused on enticing page visitors to register for a free, limited plan with the hope of later upselling them to a premium option. However, this approach failed to adequately convey the full value of Going’s premium offerings, resulting in disappointing conversion rates.

A/B Testing Scope and Variants: To tackle this issue, Going utilized Unbounce’s A/B testing tools to refine their call to action (CTA). They experimented with two different CTAs on their homepage: “Sign up for free” versus “Trial for free”.

Outcomes: This seemingly minor adjustment – just a three-word change – aimed to better emphasize the advantages of the comprehensive premium plan by offering a free trial period, which made the value proposition more transparent and immediate for potential customers. The new “Trial for free” CTA reduced the form abandonment rate, which in turn produced a 104% month-over-month increase in trial starts. This substantial improvement not only boosted conversion rates from paid channels but also, for the first time, made paid traffic outperform organic traffic.

Based on a story by: Paul Park (Unbounce)

Follow A/B testing best practices—start optimizing your landing page with Landingi!

3. WorkZone

Tested Element: Customer testimonial logos placed next to the lead generation form

Background (Challenge): WorkZone, a US-based software company specializing in project management solutions and documentation collaboration tools, needed to enhance its lead conversion rates. To bolster its brand reputation, WorkZone featured a customer review section next to the demo request form on its lead generation page. However, the colorful customer testimonial logos distracted visitors from completing the form, negatively impacting conversions.

A/B Testing Scope and Variants: To address this issue, WorkZone conducted an A/B test to determine if changing the companies’ logos in customer testimonials from their original color to black and white would reduce the distraction and increase the number of demo requests. The test ran for 22 days, comparing the original colorful logos (control) with the black-and-white versions (variation).

Outcomes: The black-and-white logos brought 34% more form submissions than the original version. With a statistical significance of 99%, the conclusion was simple: the redesigned logos effectively reduced distraction and enhanced lead conversion. They were implemented immediately after the test.

Based on a story by: Astha Khandelwal (VWO)

Ready to discover what works best? Run A/B tests with Landingi today!

4. Orange

Tested Element: Lightbox/Overlay

Background (Challenge): Orange’s mobile subscriptions are a vital part of their business strategy. The primary goal was to enhance the website’s lead collection rate and increase the number of active mobile users. Data indicated that the subscription page was the key source of registered leads, prompting a focus on optimizing this page to boost lead collection overall.

A/B Testing Scope and Variants: Previously, Orange used an exit-intent overlay (a type of lightbox) on the desktop version of their subscription page to capture leads before users left the site. The A/B test aimed to determine whether implementing a time-based overlay on the mobile version would improve lead collection. The test involved triggering an overlay after 15 seconds for users who had not yet provided their contact information, comparing this variant against the standard page without the overlay.

Outcomes: The results were remarkable, with a 106.29% increase in the lead collection rate. The experiment showed that, on mobile devices, the page with a time-based overlay significantly outperformed the page without one, leading to an enhanced conversion rate.

Based on a story by: Oana Predoiu (Omniconvert)

5. InsightSquared (now: Mediafly)

Tested Element: Form fields on a lead generation form

Background (Challenge): InsightSquared, a B2B company focused on simplifying sales forecasting, faced a challenge with their lead generation forms. The original form appeared overly lengthy due to several optional fields, which seemed to intimidate potential leads and led to a higher abandonment rate. Despite these fields being optional, their presence was deterring users from completing the form.

A/B Testing Scope and Variants: The company decided to test a streamlined version of their form by removing the optional fields, aiming to make the form less daunting and more user-friendly. The key metric was the percentage of users who provided their phone numbers, with an initial observation that only 15% were doing so. The test involved deploying the new, simplified form on the eBook download page to see if the reduction in fields would lead to a higher conversion rate.

Outcomes: The streamlined form significantly improved user engagement, resulting in a remarkable 112% increase in conversions. This confirmed that simplifying the form by removing optional fields made it more approachable, thereby encouraging more users to complete it.

Based on a story by: Willem Drijver

Unlock higher conversions—start A/B testing your landing page with Landingi!

How do Landing Page Incrementality Experiments Differ from A/B Experiments?

Landing page incrementality experiments measure the true additional impact of a whole marketing campaign or a set of marketing interventions by comparing the performance of a test group exposed to the intervention (or campaign) against a control group over a longer period. A/B experiments, by contrast, compare two or more versions of a landing page (usually differing in a single element rather than several) to determine which performs better in a short-term, controlled environment. The table below summarizes the differences:

| Aspect | A/B Experiments | Incrementality Experiments |
|---|---|---|
| Objective | Compare two or more versions to identify the best performer | Measure the additional impact of a specific campaign or a set of marketing interventions |
| Time Frame | Short-term, immediate results | Long-term, assessing sustained impact |
| Insight Type | Determine which version performs better | Determine the net effect of the whole campaign (or at least a set of actions) |
| Focus | Specific page elements and their performance | Overall performance metrics and their incremental change |
| Example Use Case | Testing different headlines or call-to-action buttons | Assessing the impact of the pricing policy of a winter sale |
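At its core, an incrementality experiment compares the conversion rate of a group exposed to the campaign with that of a holdout (control) group that was not exposed, and attributes only the difference to the campaign. A minimal Python sketch of that arithmetic, assuming the test and holdout groups were formed at random; the function name, group sizes and conversion counts are invented for illustration.

```python
def incremental_impact(test_conversions: int, test_size: int,
                       holdout_conversions: int, holdout_size: int) -> dict[str, float]:
    """Estimate conversions caused by the campaign, using a holdout group as the baseline."""
    test_rate = test_conversions / test_size
    holdout_rate = holdout_conversions / holdout_size
    # Conversions the test group would likely have produced without the campaign.
    baseline_conversions = holdout_rate * test_size
    incremental = test_conversions - baseline_conversions
    return {
        "incremental_conversions": incremental,
        "incremental_lift": (test_rate - holdout_rate) / holdout_rate,
    }


if __name__ == "__main__":
    result = incremental_impact(test_conversions=1_300, test_size=50_000,
                                holdout_conversions=220, holdout_size=10_000)
    print(result)
```

The holdout group is what separates this from an A/B test: instead of asking which page version wins, it asks how many conversions would have happened anyway without the campaign.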

What is the Best A/B Testing Tool for Landing Pages?

The best A/B testing tool for landing pages is Landingi: along with its super-simple interface, it offers plenty of functions unavailable in most competitors’ tools, such as Unbounce or Carrd. Apart from simple A/B tests comparing two variations of your landing page (e.g., two otherwise identical pages with different calls to action or headlines), the platform lets you run, among others:

- multivariate testing (A/B/x testing) – it allows you to create several variants of your landing page to run one comprehensive test of multiple changes simultaneously,

- split testing – comparing the performance of two or more totally different pages (not variations of one page) by distributing the traffic arriving at the same URL between them, randomly and in equal (or custom) proportions, to determine which page converts better (this helps you find out more quickly which design strategy brings better outcomes).
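Multivariate tests need much more traffic than a simple A/B test because, in a full-factorial design (one common approach; a given tool may structure its tests differently), every combination of the changed elements becomes a separate variant. A short Python sketch with `itertools.product`, using hypothetical headline, button color and hero image options:

```python
from itertools import product

# Hypothetical options for three page elements.
elements = {
    "headline": ["Sign Up Now", "Get Exclusive Updates"],
    "button_color": ["green", "orange"],
    "hero_image": ["photo", "illustration", "video"],
}

# Every combination of options becomes its own page variant.
variants = [dict(zip(elements, combo)) for combo in product(*elements.values())]

print(len(variants))  # 2 * 2 * 3 = 12
for variant in variants[:3]:
    print(variant)
```

With 12 variants, each one receives only about a twelfth of the traffic, so reaching statistical significance takes considerably longer than with two variants.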

An additional benefit is having testing tools integrated into the landing page building platform, which is not only convenient but also ensures compatibility and reduces the cost spent on digital marketing tools.

Run Landing Page AB Test with Landingi

Though Landingi is primarily a popular page builder, it comes with a set of conversion rate optimization tools, from a comprehensive landing page experiment suite (including multivariate testing and landing page split testing) through user behavior tracking to multi-purpose AI tools. With these at hand, along with tons of ready-to-use templates and integrations, you can build landing pages that are not only highly appealing but also successful at reaching your target audience.

For those looking to optimize their ad spend, Landingi also supports experimenting with landing pages linked to your Google Ads campaigns. In this case, A/B testing helps determine which page layout drives more conversions, ensuring your ad budget is used effectively.

Landingi is also a great choice for those without previous landing page A/B testing experience, as its testing features are available free for 7 days, which is a good way to get familiar with A/B testing in practice. Just sign up and try it out!